xUnit Testing Guide

This guide is aimed at developers who intend to write unit tests with the xUnit testing tool. It is intentionally kept concise so that it can serve as a cheat sheet.

Starting a new test project

Each project must have an accompanying test project in the tst folder!

To create a new test project, change into tst/sub.project.test and run the command:

dotnet new xunit

Update the generated project file to:

- reference the project to be tested
- add test coverage support

<Project Sdk="Microsoft.NET.Sdk">

  <PropertyGroup>
    <TargetFramework>net5.0</TargetFramework>
    <IsPackable>false</IsPackable>
    <!-- coverlet.msbuild: collect coverage on every "dotnet test" run and write it in lcov format -->
    <CollectCoverage>true</CollectCoverage>
    <CoverletOutput>__coverage/lcov.info</CoverletOutput>
    <CoverletOutputFormat>lcov</CoverletOutputFormat>
    <!-- exclude generated sources from coverage -->
    <ExcludeByFile>**/*.g.cs</ExcludeByFile>
  </PropertyGroup>

  <!-- the project under test -->
  <ItemGroup>
    <ProjectReference Include="../../src/My.Server/My.Server.csproj" />
  </ItemGroup>

  <ItemGroup>
    <PackageReference Include="Moq" Version="4.20.*" />
    <PackageReference Include="Microsoft.NET.Test.Sdk" Version="17.1.0" />
    <PackageReference Include="xunit" Version="2.4.1" />
    <PackageReference Include="xunit.runner.visualstudio" Version="2.4.3">
      <IncludeAssets>runtime; build; native; contentfiles; analyzers; buildtransitive</IncludeAssets>
      <PrivateAssets>all</PrivateAssets>
    </PackageReference>
    <PackageReference Include="coverlet.msbuild" Version="6.0.0">
      <IncludeAssets>runtime; build; native; contentfiles; analyzers; buildtransitive</IncludeAssets>
      <PrivateAssets>all</PrivateAssets>
    </PackageReference>
    <PackageReference Include="ReportGenerator" Version="5.2.*" PrivateAssets="All" />
  </ItemGroup>

  <!-- turn the coverlet result into an HTML report after each test run -->
  <Target Name="GenerateHtmlCoverageReport" AfterTargets="GenerateCoverageResultAfterTest">
    <ReportGenerator ReportFiles="@(CoverletReport)" TargetDirectory="__coverage/html" ReportTypes="HtmlInline_AzurePipelines_Light" />
  </Target>

</Project>

Creating first test(s)

Rename and update the auto-created UnitTest1.cs file.

(Note that test methods need to be attributed with [Fact].)

Within a test method, use the static methods of the xUnit Assert class.

(Unfortunately, there does not seem to be API documentation for it yet, so have a look at this cheat sheet:)

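| Category | Assertions |
|---|---|
| Boolean | `Assert.True(condition)`, `Assert.False(condition)` |
| Null | `Assert.Null(obj)`, `Assert.NotNull(obj)` |
| Simple Equality | `Assert.Equal(expected, actual)`, `Assert.NotEqual(expected, actual)` |
| Reference Equality | `Assert.Same(expected, actual)`, `Assert.NotSame(expected, actual)` |
| Empty enumerables | `Assert.Empty(collection)`, `Assert.NotEmpty(collection)` |
| IEnumerable contains | `Assert.Contains(expected, collection)`, `Assert.DoesNotContain(expected, collection)`, `Assert.Contains(collection, predicate)` |
| Ranges | `Assert.InRange(actual, low, high)`, `Assert.NotInRange(actual, low, high)` |
| Type Equality | `Assert.IsType<T>(obj)`, `Assert.IsNotType<T>(obj)`, `Assert.IsAssignableFrom<T>(obj)` |
| Throws | `Assert.Throws<T>(testCode)`, `Assert.ThrowsAny<T>(testCode)`, `Assert.ThrowsAsync<T>(testCode)` |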
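The following minimal sketch shows several of these assertions side by side. The class and method names are illustrative only, and the values are made up for the example. Note that Assert.Throws requires the exception type to match exactly, while Assert.ThrowsAny also accepts derived exception types.

```csharp
using System;
using System.Collections.Generic;
using Xunit;

public class AssertCheatSheetTests
{
    [Fact]
    public void BooleanNullAndEquality()
    {
        string name = "xunit";
        object sameRef = name;   // second reference to the very same string instance
        object missing = null;

        Assert.True(name.Length == 5);             // Boolean
        Assert.False(string.IsNullOrEmpty(name));
        Assert.Null(missing);                      // Null
        Assert.NotNull(name);
        Assert.Equal("xunit", name);               // Simple equality
        Assert.NotEqual("nunit", name);
        Assert.Same(name, sameRef);                // Reference equality
        Assert.NotSame(new object(), new object());
    }

    [Fact]
    public void CollectionsRangesTypesAndExceptions()
    {
        var numbers = new List<int> { 1, 2, 3 };

        Assert.Empty(new List<int>());             // Empty enumerables
        Assert.NotEmpty(numbers);
        Assert.Contains(2, numbers);               // IEnumerable contains
        Assert.DoesNotContain(42, numbers);
        Assert.Contains(numbers, n => n > 2);      // ... or match by predicate
        Assert.InRange(numbers.Count, 1, 5);       // Ranges (bounds are inclusive)
        Assert.IsType<List<int>>(numbers);         // exact type
        Assert.IsAssignableFrom<IEnumerable<int>>(numbers);

        // Throws: exact exception type; the caught exception is returned for further checks
        var ex = Assert.Throws<FormatException>(() => int.Parse("not a number"));
        Assert.NotNull(ex.Message);
    }
}
```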

Run tests

Change into your test project and run the command:

dotnet test

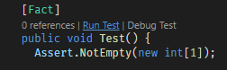

Execute tests from VS Code

Simply use the code lenses shown in your test code.

(Note: To exclude a test method from execution, give it the attribute [Fact(Skip = "reason for skipping")].)
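Here is a small sketch of a skipped test next to a regular one. The class name and the skip reason are made up. The runner reports the skipped test together with its reason, and the test body is never executed.

```csharp
using System;
using Xunit;

public class SkipExampleTests
{
    [Fact]
    public void RunsNormally() => Assert.Equal(4, 2 + 2);

    // Reported as skipped (with the reason below); the body is never executed.
    [Fact(Skip = "Waiting for the test database to be available")]
    public void TemporarilyExcluded() => throw new NotImplementedException();
}
```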

Setup of test context

Simply use the constructor of a test class to set up a test context for the tests.

If the context needs to be torn down afterwards, implement the IDisposable interface on the test class.

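using System;
using Xunit;

public class UnitTest : IDisposable {
    MyContextType myContext;

    // Set-up: runs before each test method
    public UnitTest() { this.myContext = new MyContextType(); /* ... */ }

    // Tear-down: runs after each test method
    public void Dispose() { myContext.Dispose(); }

    /* ... */
}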

(Note: xUnit creates a new instance of your test class for every test method. The constructor sets up the context before each test method executes, and Dispose() tears it down after each test method ends. Every test method therefore gets a cleanly set-up context, BUT it is a new one for each test method!)
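The per-test lifecycle described in the note can be sketched as follows. This is an illustrative example, not part of any project: a plain list of strings stands in for MyContextType. Both tests modify the context, yet each one still starts from the single seeded entry, because every test runs on its own, freshly constructed instance.

```csharp
using System;
using System.Collections.Generic;
using Xunit;

public class PerTestContextTests : IDisposable
{
    // Illustrative stand-in for MyContextType.
    private readonly List<string> context;

    public PerTestContextTests()
    {
        // Set-up: runs before EACH test method, on a new instance.
        context = new List<string> { "seed" };
    }

    public void Dispose()
    {
        // Tear-down: runs after EACH test method.
        context.Clear();
    }

    [Fact]
    public void FirstTestSeesFreshContext()
    {
        Assert.Single(context);
        context.Add("added by first test");
    }

    [Fact]
    public void SecondTestSeesFreshContext()
    {
        // Whatever order xUnit picks, this instance was set up independently.
        Assert.Single(context);
        context.Add("added by second test");
    }
}
```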

Sharing context across test methods

Sometimes test context creation and cleanup can be very expensive. If you were to run the creation and cleanup code for every test, the tests might become slower than acceptable.

xUnit provides so-called test fixtures to set up a context that is shared across all tests of a test class.

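using System;
using Xunit;

public class UnitTest : IClassFixture<UnitTest.MyContextType> {

    // Shared context: created once before the first test of this class, disposed after the last one
    public class MyContextType : IDisposable {
        public void Dispose() {}
    }

    MyContextType myContext;

    // xUnit passes the same MyContextType instance to every instance of the test class
    public UnitTest(MyContextType myContext) { this.myContext = myContext; /* ... */ }

    /* ... */
}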

Here the test context is created once by xUnit, and the same instance is then passed to the constructor of each instance of the test class. After all tests of the class have finished, the test context is disposed.
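Below is a minimal sketch of a class fixture. It assumes a hypothetical ExpensiveFixture that merely counts how often it is constructed, in place of a real expensive resource. The check that the counter equals 1 only holds if no other test class uses the same fixture type.

```csharp
using System;
using System.Threading;
using Xunit;

// Hypothetical fixture standing in for an expensive resource.
public class ExpensiveFixture : IDisposable
{
    // Counts how often the fixture was constructed.
    public static int Instances;

    public ExpensiveFixture()
    {
        Interlocked.Increment(ref Instances);
        Resource = "connected";
    }

    public string Resource { get; private set; }

    public void Dispose() => Resource = "disposed";
}

public class SharedFixtureTests : IClassFixture<ExpensiveFixture>
{
    private readonly ExpensiveFixture fixture;

    // xUnit injects the one shared fixture instance.
    public SharedFixtureTests(ExpensiveFixture fixture) => this.fixture = fixture;

    [Fact]
    public void FirstTest()
    {
        Assert.Equal("connected", fixture.Resource);
        Assert.Equal(1, ExpensiveFixture.Instances);
    }

    [Fact]
    public void SecondTest()
    {
        // Still the same single fixture instance.
        Assert.Equal(1, ExpensiveFixture.Instances);
    }
}
```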

Sharing context across several test classes

Sometimes it is required to share a context among multiple test classes. A test in-memory database would be a great use case: Initialize a database with a set of test data, and then leave that test data in place for use by multiple test classes.

To set up such a shared context, create an xUnit collection fixture:

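using System;
using Xunit;

public class MySharedContext : IDisposable {
    public void Dispose() { }
}

// Marker class: binds the collection name to the fixture; it holds no code and is never instantiated
[CollectionDefinition("My shared context")]
public class SomeContextCollection : ICollectionFixture<MySharedContext> { /* no code here! */ }

/* ... */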

Each test class that should share this test context then looks like this. (Note: All these test classes must use exactly the same name in their [Collection] attribute as the one given in [CollectionDefinition].)

/* ... */

[Collection("My shared context")]
public class UnitTest1 { /* tests go here */ }

[Collection("My shared context")]
public class UnitTest2 { /* tests go here */ }

(Also note: Defining test collections like this also controls which tests are executed in parallel. By default, xUnit v2 runs tests from different test collections in parallel, while tests within the same test collection are executed one after another.)
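Picking up the in-memory database use case, a collection fixture might look like the sketch below. A dictionary is only a stand-in for a real test database, and all names are hypothetical. Keep in mind that the collection definition class has to live in the same assembly as the test classes that use it.

```csharp
using System;
using System.Collections.Generic;
using Xunit;

// Hypothetical in-memory "database", seeded once for all classes in the collection.
public class InMemoryDbFixture : IDisposable
{
    public Dictionary<string, string> Customers { get; } = new Dictionary<string, string>();

    public InMemoryDbFixture()
    {
        Customers["C001"] = "Alice";
        Customers["C002"] = "Bob";
    }

    public void Dispose() => Customers.Clear();
}

[CollectionDefinition("In-memory database")]
public class InMemoryDbCollection : ICollectionFixture<InMemoryDbFixture>
{
    // Marker class only: no code here.
}

[Collection("In-memory database")]
public class CustomerLookupTests
{
    private readonly InMemoryDbFixture db;
    public CustomerLookupTests(InMemoryDbFixture db) => this.db = db;

    [Fact]
    public void FindsSeededCustomer() => Assert.Equal("Alice", db.Customers["C001"]);
}

[Collection("In-memory database")]
public class CustomerCountTests
{
    private readonly InMemoryDbFixture db;
    public CustomerCountTests(InMemoryDbFixture db) => this.db = db;

    [Fact]
    public void SeesSameSeedData() => Assert.Equal(2, db.Customers.Count);
}
```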

Showing diagnostic messages with test runner output

Messages written to the console (e.g. via Console.WriteLine) will typically not show up with the other xUnit runner output. (This is due to parallel test execution: console output could not be reliably attributed to the test that produced it.)

To make diagnostic messages appear with the normal test runner output, write them to an ITestOutputHelper, which xUnit passes to the constructor of your test class:

using Xunit;
using Xunit.Abstractions;

public class UnitTest {
    ITestOutputHelper tstout;

    // xUnit injects an output helper bound to the currently running test
    public UnitTest(ITestOutputHelper tstout) { this.tstout = tstout; /* ... */ }

    [Fact]
    public void MyTest() {
        tstout.WriteLine("My test diagnostic message...");
    }

    /* ... */
}

Using mock objects to aid with tests

Mock objects are useful wherever we need to supply the classes/methods under test with parameters that represent heavy-weight objects, like an IDataStore, which normally can only exist in a broader context (like an entire server).

To simulate real instances of such objects, we can create mock objects using a mocking framework. (We will use Moq 4 here.)

Let's say we want to test a method that takes a repository of persistent objects as a parameter, like:

public int GetInterestingProceduresCount(IRepo<Data.Entity.ProcedureTemplate> procedureRepo);

We also know that this method is going to query for procedures, i.e. the method under test will obtain an IQueryable from the repository.

So we set up the mock object to simulate such an IRepo<>:

var repoMock= new Mock<IRepo<Data.Entity.ProcedureTemplate>>();

Then we define some very basic simulated behavior for that IRepo<>:

repoMock.Setup(ptRepo => ptRepo.AllUntracked)
        .Returns(Data.Entity.ProcedureTemplate.DefaultProcedureTemplates.AsQueryable());

Now we can pass an instance of the mocked repository into our method to be tested:

var cnt = obj.GetInterestingProceduresCount(repoMock.Object);
Assert.True(cnt > 3);
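The snippets above depend on project types (IRepo<>, Data.Entity.ProcedureTemplate, obj). For a self-contained picture, here is a sketch in which the interface, the entity, and the class under test (ProcedureStats) are all hypothetical stand-ins. The repository interface is reduced to the single AllUntracked property the test needs. The sketch also uses Moq's VerifyGet to check that the method under test actually read that property.

```csharp
using System.Collections.Generic;
using System.Linq;
using Moq;
using Xunit;

// Hypothetical, reduced stand-in for the real IRepo<> interface.
public interface IRepo<TEntity>
{
    IQueryable<TEntity> AllUntracked { get; }
}

// Hypothetical entity.
public class ProcedureTemplate
{
    public string Name { get; set; } = "";
    public bool IsInteresting { get; set; }
}

// Hypothetical class under test.
public class ProcedureStats
{
    public int GetInterestingProceduresCount(IRepo<ProcedureTemplate> procedureRepo)
        => procedureRepo.AllUntracked.Count(p => p.IsInteresting);
}

public class ProcedureStatsTests
{
    [Fact]
    public void CountsOnlyInterestingProcedures()
    {
        var data = new List<ProcedureTemplate>
        {
            new ProcedureTemplate { Name = "a", IsInteresting = true },
            new ProcedureTemplate { Name = "b", IsInteresting = false },
            new ProcedureTemplate { Name = "c", IsInteresting = true },
        }.AsQueryable();

        // Simulate the repository: AllUntracked returns the in-memory test data.
        var repoMock = new Mock<IRepo<ProcedureTemplate>>();
        repoMock.Setup(r => r.AllUntracked).Returns(data);

        var cnt = new ProcedureStats().GetInterestingProceduresCount(repoMock.Object);

        Assert.Equal(2, cnt);
        // The method under test read the property exactly once.
        repoMock.VerifyGet(r => r.AllUntracked, Times.Once());
    }
}
```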

Advanced IRepo<> repository mocking

Now that we know how to set up a repository mock, let's assume we want to test this method:

obj.GetInterestingByKey("some_key");

Internally, GetInterestingByKey() does something like:

return myRepo.AllUntracked.Where(entity => entity.Key == key)
                          .LoadRelated(myRepo.Store, p => p.Partner)
                          .SingleOrDefault();

To make the extension method LoadRelated() work with our mocked AllUntracked property, we also need to mock the internal store of the repository:

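// requires: using System; using System.Collections.Generic; using System.Linq; using System.Linq.Expressions; using Moq;
var prodQuery = new List<ProductDoc> { this.prod001 }.AsQueryable();
var prodPartnerQuery = new EagerLoadedQueryable<ProductDoc, Partner>(prodQuery);

// Mock the store so that LoadRelated() returns the fake eager-loaded query
var storeMock = new Mock<IDataStore>();
storeMock.Setup(s => s.LoadRelated<ProductDoc, Partner>(It.IsAny<IQueryable<ProductDoc>>(), It.IsAny<Expression<Func<ProductDoc, Partner>>>()))
         .Returns(prodPartnerQuery);

// Repository mock for ProductDoc entities, exposing the mocked store and the test data
var prodRepoMock = new Mock<IRepo<ProductDoc>>();
prodRepoMock.Setup(repo => repo.Store)
            .Returns(storeMock.Object);
prodRepoMock.Setup(repo => repo.AllUntracked)
            .Returns(prodQuery);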

Finally, to make this work, we need a fake implementation of the IEagerLoadedQueryable<E, P> interface returned from store.LoadRelated():

// requires: using System; using System.Collections.Generic; using System.Linq; using System.Linq.Expressions;
private class EagerLoadedQueryable<E, P> : IEagerLoadedQueryable<E, P> {
    readonly IQueryable<E> q;

    public EagerLoadedQueryable(IQueryable<E> q) { this.q = q; }

    // All IQueryable<E> members simply delegate to the wrapped query
    public Expression Expression => q.Expression;
    public Type ElementType => q.ElementType;
    public IQueryProvider Provider => q.Provider;
    public IEnumerator<E> GetEnumerator() => q.GetEnumerator();
    System.Collections.IEnumerator System.Collections.IEnumerable.GetEnumerator() => GetEnumerator();
}

Manage Parallel Test Execution

The degree of parallelism in test execution depends on the test runner configuration and the available CPU cores. If tests depend on the state of shared objects that get mutated, this can lead to race conditions.

Tests within one test class are never run in parallel, so tests executed from the IDE via code lens will not reveal such race conditions.

Tests run from a CI pipeline typically get reduced CPU resources and thus show few effects of parallelism.

Running tests locally with dotnet test is the most likely way to reveal race conditions.

Tests in multiple test classes that access shared state (like App.ServiceProv) should be prevented from running in parallel with each other. This can be done by marking these test classes with a common [Collection("App.ServiceProv")] attribute.

(See also: Running Tests in Parallel)
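Here is a minimal sketch of this approach. It assumes a hypothetical static GlobalState class that stands in for shared state such as App.ServiceProv. Because both classes carry the same [Collection] name, xUnit runs them one after another, so neither test can observe the other's write. If parallel execution must be switched off for the whole test assembly, xUnit also offers the assembly-level CollectionBehavior attribute shown in the comment.

```csharp
using Xunit;

// Optional alternative: disable parallel execution for the whole test assembly.
// [assembly: CollectionBehavior(DisableTestParallelization = true)]

// Hypothetical stand-in for shared global state such as App.ServiceProv.
public static class GlobalState
{
    public static string Current = "";
}

// Same collection name: xUnit never runs these two classes in parallel.
[Collection("App.ServiceProv")]
public class FirstGlobalStateTests
{
    [Fact]
    public void WritesAndReadsState()
    {
        GlobalState.Current = "first";
        Assert.Equal("first", GlobalState.Current);
    }
}

[Collection("App.ServiceProv")]
public class SecondGlobalStateTests
{
    [Fact]
    public void WritesAndReadsState()
    {
        GlobalState.Current = "second";
        Assert.Equal("second", GlobalState.Current);
    }
}
```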

Debugging

Most test debugging can be done conveniently via the IDE code lens: Debug Test.

However, some problems might only appear when the tests are executed with dotnet test from the command line, which makes running them in a debugger somewhat unwieldy.

One possible approach:

Temporarily add a hard-coded breakpoint somewhere in your code:

Tlabs.Config.DbgHelper.HardBreak();

Start your tests with dotnet test and then attach a debugger to the testhost.exe process.

(This should show your test stopped at the breakpoint, from where you can continue to debug.)
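If a helper like Tlabs.Config.DbgHelper.HardBreak() is not at hand, a similar effect can be sketched with System.Diagnostics.Debugger. The WaitForDebugger class below is hypothetical and is not the Tlabs implementation. It polls until a debugger is attached, or until a timeout expires, and then breaks. As an alternative, the VSTest platform can be told to pause testhost until a debugger attaches by setting the environment variable VSTEST_HOST_DEBUG=1 before running dotnet test.

```csharp
using System;
using System.Diagnostics;
using System.Threading;

// Hypothetical helper (not the Tlabs DbgHelper API): call it temporarily
// from the code you want to inspect while running "dotnet test".
public static class WaitForDebugger
{
    // Polls until a debugger is attached or the timeout expires.
    // Returns true (after breaking into the debugger) if one was attached in time.
    public static bool Break(TimeSpan timeout)
    {
        var stopwatch = Stopwatch.StartNew();
        while (!Debugger.IsAttached && stopwatch.Elapsed < timeout)
            Thread.Sleep(TimeSpan.FromMilliseconds(200));

        if (!Debugger.IsAttached)
            return false;

        Debugger.Break();   // execution stops here once the debugger has attached
        return true;
    }
}
```

Typical temporary usage inside the code under test would be WaitForDebugger.Break(TimeSpan.FromMinutes(2)); followed by attaching to testhost.exe as described above.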